Table of Contents

- 1. Introduction

- 2. Java Persistence API

- 1. Introduction

- 2. Why JPA?

- 3. Java Persistence API Architecture

- 4. Entity

- 5. Metadata

- 6. Persistence

- 7. EntityManagerFactory

- 8. EntityManager

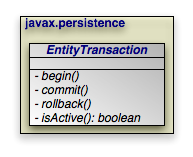

- 9. Transaction

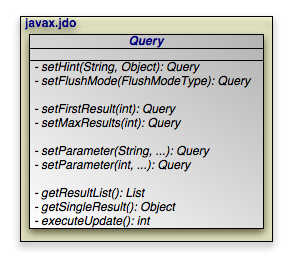

- 10. JPA Query

- 1. JPQL API

- 2. JPQL Language Reference

- 2.1. JPQL Statement Types

- 2.2. JPQL Abstract Schema Types and Query Domains

- 2.3. JPQL FROM Clause and Navigational Declarations

- 2.4. JPQL WHERE Clause

- 2.5. JPQL Conditional Expressions

- 2.5.1. JPQL Literals

- 2.5.2. JPQL Identification Variables

- 2.5.3. JPQL Path Expressions

- 2.5.4. JPQL Input Parameters

- 2.5.5. JPQL Conditional Expression Composition

- 2.5.6. JPQL Operators and Operator Precedence

- 2.5.7. JPQL Between Expressions

- 2.5.8. JPQL In Expressions

- 2.5.9. JPQL Like Expressions

- 2.5.10. JPQL Null Comparison Expressions

- 2.5.11. JPQL Empty Collection Comparison Expressions

- 2.5.12. JPQL Collection Member Expressions

- 2.5.13. JPQL Exists Expressions

- 2.5.14. JPQL All or Any Expressions

- 2.5.15. JPQL Subqueries

- 2.5.16. JPQL Functional Expressions

- 2.6. JPQL GROUP BY, HAVING

- 2.7. JPQL SELECT Clause

- 2.8. JPQL ORDER BY Clause

- 2.9. JPQL Bulk Update and Delete

- 2.10. JPQL Null Values

- 2.11. JPQL Equality and Comparison Semantics

- 2.12. JPQL BNF

- 11. SQL Queries

- 12. Mapping Metadata

- 13. Conclusion

- 3. Reference Guide

- 1. Introduction

- 2. Configuration

- 1. Introduction

- 2. Runtime Configuration

- 3. Command Line Configuration

- 4. Plugin Configuration

- 5. OpenJPA Properties

- 5.1. openjpa.AutoClear

- 5.2. openjpa.AutoDetach

- 5.3. openjpa.BrokerFactory

- 5.4. openjpa.BrokerImpl

- 5.5. openjpa.ClassResolver

- 5.6. openjpa.Compatibility

- 5.7. openjpa.ConnectionDriverName

- 5.8. openjpa.Connection2DriverName

- 5.9. openjpa.ConnectionFactory

- 5.10. openjpa.ConnectionFactory2

- 5.11. openjpa.ConnectionFactoryName

- 5.12. openjpa.ConnectionFactory2Name

- 5.13. openjpa.ConnectionFactoryMode

- 5.14. openjpa.ConnectionFactoryProperties

- 5.15. openjpa.ConnectionFactory2Properties

- 5.16. openjpa.ConnectionPassword

- 5.17. openjpa.Connection2Password

- 5.18. openjpa.ConnectionProperties

- 5.19. openjpa.Connection2Properties

- 5.20. openjpa.ConnectionURL

- 5.21. openjpa.Connection2URL

- 5.22. openjpa.ConnectionUserName

- 5.23. openjpa.Connection2UserName

- 5.24. openjpa.ConnectionRetainMode

- 5.25. openjpa.DataCache

- 5.26. openjpa.DataCacheManager

- 5.27. openjpa.DataCacheTimeout

- 5.28. openjpa.DetachState

- 5.29. openjpa.DynamicDataStructs

- 5.30. openjpa.FetchBatchSize

- 5.31. openjpa.FetchGroups

- 5.32. openjpa.FlushBeforeQueries

- 5.33. openjpa.IgnoreChanges

- 5.34. openjpa.Id

- 5.35. openjpa.InverseManager

- 5.36. openjpa.LockManager

- 5.37. openjpa.LockTimeout

- 5.38. openjpa.Log

- 5.39. openjpa.ManagedRuntime

- 5.40. openjpa.Mapping

- 5.41. openjpa.MaxFetchDepth

- 5.42. openjpa.MetaDataFactory

- 5.43. openjpa.MetaDataRepository

- 5.44. openjpa.Multithreaded

- 5.45. openjpa.Optimistic

- 5.46. openjpa.OrphanedKeyAction

- 5.47. openjpa.NontransactionalRead

- 5.48. openjpa.NontransactionalWrite

- 5.49. openjpa.ProxyManager

- 5.50. openjpa.QueryCache

- 5.51. openjpa.QueryCompilationCache

- 5.52. openjpa.ReadLockLevel

- 5.53. openjpa.RemoteCommitProvider

- 5.54. openjpa.RestoreState

- 5.55. openjpa.RetainState

- 5.56. openjpa.RetryClassRegistration

- 5.57. openjpa.RuntimeUnenhancedClasses

- 5.58. openjpa.SavepointManager

- 5.59. openjpa.Sequence

- 5.60. openjpa.TransactionMode

- 5.61. openjpa.WriteLockLevel

- 6. OpenJPA JDBC Properties

- 6.1. openjpa.jdbc.ConnectionDecorators

- 6.2. openjpa.jdbc.DBDictionary

- 6.3. openjpa.jdbc.DriverDataSource

- 6.4. openjpa.jdbc.EagerFetchMode

- 6.5. openjpa.jdbc.FetchDirection

- 6.6. openjpa.jdbc.JDBCListeners

- 6.7. openjpa.jdbc.LRSSize

- 6.8. openjpa.jdbc.MappingDefaults

- 6.9. openjpa.jdbc.MappingFactory

- 6.10. openjpa.jdbc.QuerySQLCache

- 6.11. openjpa.jdbc.ResultSetType

- 6.12. openjpa.jdbc.Schema

- 6.13. openjpa.jdbc.SchemaFactory

- 6.14. openjpa.jdbc.Schemas

- 6.15. openjpa.jdbc.SQLFactory

- 6.16. openjpa.jdbc.SubclassFetchMode

- 6.17. openjpa.jdbc.SynchronizeMappings

- 6.18. openjpa.jdbc.TransactionIsolation

- 6.19. openjpa.jdbc.UpdateManager

- 3. Logging

- 4. JDBC

- 1. Using the OpenJPA DataSource

- 2. Using a Third-Party DataSource

- 3. Runtime Access to DataSource

- 4. Database Support

- 5. Setting the Transaction Isolation

- 6. Setting the SQL Join Syntax

- 7. Accessing Multiple Databases

- 8. Configuring the Use of JDBC Connections

- 9. Statement Batching

- 10. Large Result Sets

- 11. Default Schema

- 12. Schema Reflection

- 13. Schema Tool

- 14. XML Schema Format

- 5. Persistent Classes

- 6. Metadata

- 7. Mapping

- 1. Forward Mapping

- 2. Reverse Mapping

- 3. Meet-in-the-Middle Mapping

- 4. Mapping Defaults

- 5. Mapping Factory

- 6. Non-Standard Joins

- 7. Additional JPA Mappings

- 8. Key Columns

- 9. Key Join Columns

- 10. Key Embedded Mapping

- 11. Examples

- 12. Mapping Limitations

- 13. Mapping Extensions

- 14. Custom Mappings

- 15. Orphaned Keys

- 8. Deployment

- 9. Runtime Extensions

- 10. Caching

- 11. Remote and Offline Operation

- 12. Distributed Persistence

- 13. Third Party Integration

- 14. Optimization Guidelines

- 1. JPA Resources

- 2. Supported Databases

List of Tables

- 2.1. Persistence Mechanisms

- 10.1. Interaction of ReadLockMode hint and LockManager

- 4.1. OpenJPA Automatic Flush Behavior

- 5.1. Externalizer Options

- 5.2. Factory Options

- 10.1. Data access methods

- 10.2. Pre-defined aliases

- 10.3. Pre-defined aliases

- 14.1. Optimization Guidelines

- 2.1. Supported Databases and JDBC Drivers

List of Examples

- 3.1. Interaction of Interfaces Outside Container

- 3.2. Interaction of Interfaces Inside Container

- 4.1. Persistent Class

- 4.2. Identity Class

- 5.1. Class Metadata

- 5.2. Complete Metadata

- 6.1. persistence.xml

- 6.2. Obtaining an EntityManagerFactory

- 7.1. Behavior of Transaction Persistence Context

- 7.2. Behavior of Extended Persistence Context

- 8.1. Persisting Objects

- 8.2. Updating Objects

- 8.3. Removing Objects

- 8.4. Detaching and Merging

- 9.1. Grouping Operations with Transactions

- 10.1. Query Hints

- 10.2. Named Query using Hints

- 10.3. Delete by Query

- 10.4. Update by Query

- 11.1. Creating a SQL Query

- 11.2. Retrieving Persistent Objects

- 11.3. SQL Query Parameters

- 12.1. Mapping Classes

- 12.2. Defining a Unique Constraint

- 12.3. Identity Mapping

- 12.4. Generator Mapping

- 12.5. Single Table Mapping

- 12.6. Joined Subclass Tables

- 12.7. Table Per Class Mapping

- 12.8. Inheritance Mapping

- 12.9. Discriminator Mapping

- 12.10. Basic Field Mapping

- 12.11. Secondary Table Field Mapping

- 12.12. Embedded Field Mapping

- 12.13. Mapping Mapped Superclass Field

- 12.14. Direct Relation Field Mapping

- 12.15. Join Table Mapping

- 12.16. Join Table Map Mapping

- 12.17. Full Entity Mappings

- 2.1. Code Formatting with the Application Id Tool

- 3.1. Standard OpenJPA Log Configuration

- 3.2. Standard OpenJPA Log Configuration + All SQL Statements

- 3.3. Logging to a File

- 3.4. Standard Log4J Logging

- 3.5. JDK 1.4 Log Properties

- 3.6. Custom Logging Class

- 4.1. Properties for the OpenJPA DataSource

- 4.2. Properties File for a Third-Party DataSource

- 4.3. Managed DataSource Configuration

- 4.4. Using the EntityManager's Connection

- 4.5. Using the EntityManagerFactory's DataSource

- 4.6. Specifying a DBDictionary

- 4.7. Specifying a Transaction Isolation

- 4.8. Specifying the Join Syntax Default

- 4.9. Specifying the Join Syntax at Runtime

- 4.10. Specifying Connection Usage Defaults

- 4.11. Specifying Connection Usage at Runtime

- 4.12. Enable SQL statement batching

- 4.13. Disable SQL statement batching

- 4.14. Plug-in custom statement batching implementation

- 4.15. Specifying Result Set Defaults

- 4.16. Specifying Result Set Behavior at Runtime

- 4.17. Schema Creation

- 4.18. SQL Scripting

- 4.19. Table Cleanup

- 4.20. Schema Drop

- 4.21. Schema Reflection

- 4.22. Basic Schema

- 4.23. Full Schema

- 5.1. Using the OpenJPA Enhancer

- 5.2. Using the OpenJPA Agent for Runtime Enhancement

- 5.3. Passing Options to the OpenJPA Agent

- 5.4. JPA Datastore Identity Metadata

- 5.5. Finding an Entity with an Entity Identity Field

- 5.6. Id Class for Entity Identity Fields

- 5.7. Using the Application Identity Tool

- 5.8. Specifying Logical Inverses

- 5.9. Enabling Managed Inverses

- 5.10. Log Inconsistencies

- 5.11. Using Initial Field Values

- 5.12. Using a Large Result Set Iterator

- 5.13. Marking a Large Result Set Field

- 5.14. Configuring the Proxy Manager

- 5.15. Using Externalization

- 5.16. Querying Externalization Fields

- 5.17. Using External Values

- 5.18. Custom Fetch Group Metadata

- 5.19. Load Fetch Group Metadata

- 5.20. Using the FetchPlan

- 5.21. Adding an Eager Field

- 5.22. Setting the Default Eager Fetch Mode

- 5.23. Setting the Eager Fetch Mode at Runtime

- 6.1. Setting a Standard Metadata Factory

- 6.2. Setting a Custom Metadata Factory

- 6.3.

- 6.4. OpenJPA Metadata Extensions

- 7.1. Using the Mapping Tool

- 7.2. Creating the Relational Schema from Mappings

- 7.3. Refreshing entire schema and cleaning out tables

- 7.4. Dropping Mappings and Association Schema

- 7.5. Create DDL for Current Mappings

- 7.6. Create DDL to Update Database for Current Mappings

- 7.7. Configuring Runtime Forward Mapping

- 7.8. Reflection with the Schema Tool

- 7.9. Using the Reverse Mapping Tool

- 7.10. Customizing Reverse Mapping with Properties

- 7.11. Validating Mappings

- 7.12. Configuring Mapping Defaults

- 7.13. Standard JPA Configuration

- 7.14. Datastore Identity Mapping

- 7.15. Overriding Complex Mappings

- 7.16. One-Sided One-Many Mapping

- 7.17. myaddress.xsd

- 7.18. Address.Java

- 7.19. USAAddress.java

- 7.20. CANAddress.java

- 7.21. Showing annotated Order entity with XML mapping strategy

- 7.22. Showing creation of Order Entity having shipAddress mapped to XML column

- 7.23. Sample EJB Queries for XML Column mapping

- 7.24. Showing annotated InputStream

- 7.25. String Key, Entity Value Map Mapping

- 7.26. Custom Logging Orphaned Keys

- 8.1. Configuring Transaction Manager Integration

- 9.1. Evict from Data Cache

- 9.2. Using a JPA Extent

- 9.3. Setting Default Lock Levels

- 9.4. Setting Runtime Lock Levels

- 9.5. Locking APIs

- 9.6. Disabling Locking

- 9.7. Using Savepoints

- 9.8. Named Seq Sequence

- 9.9. System Sequence Configuration

- 10.1. Single-JVM Data Cache

- 10.2. Data Cache Size

- 10.3. Data Cache Timeout

- 10.4. Excluding entities

- 10.5. Including entities

- 10.6. Accessing the StoreCache

- 10.7. StoreCache Usage

- 10.8. Automatic Data Cache Eviction

- 10.9. Accessing the QueryResultCache

- 10.10. Query Cache Size

- 10.11. Disabling the Query Cache

- 10.12. Evicting Queries

- 10.13. Pinning, and Unpinning Query Results

- 10.14. Disabling and Enabling Query Caching

- 10.15. Query Replaces Extent

- 11.1. Configuring Detached State

- 11.2. JMS Remote Commit Provider Configuration

- 11.3. TCP Remote Commit Provider Configuration

- 11.4. JMS Remote Commit Provider transmitting Persisted Object Ids

- 13.1. Using the <config> Ant Tag

- 13.2. Using the Properties Attribute of the <config> Tag

- 13.3. Using the PropertiesFile Attribute of the <config> Tag

- 13.4. Using the <classpath> Ant Tag

- 13.5. Using the <codeformat> Ant Tag

- 13.6. Invoking the Enhancer from Ant

- 13.7. Invoking the Application Identity Tool from Ant

- 13.8. Invoking the Mapping Tool from Ant

- 13.9. Invoking the Reverse Mapping Tool from Ant

- 13.10. Invoking the Schema Tool from Ant

- 2.1. Example properties for Derby

- 2.2. Example properties for Interbase

- 2.3. Example properties for JDataStore

- 2.4. Example properties for IBM DB2

- 2.5. Example properties for Empress

- 2.6. Example properties for H2 Database Engine

- 2.7. Example properties for Hypersonic

- 2.8. Example properties for Firebird

- 2.9. Example properties for Informix Dynamic Server

- 2.10. Example properties for InterSystems Cache

- 2.11. Example properties for Microsoft Access

- 2.12. Example properties for Microsoft SQLServer

- 2.13. Example properties for Microsoft FoxPro

- 2.14. Example properties for MySQL

- 2.15. Example properties for Oracle

- 2.16. Using Oracle Hints

- 2.17. Example properties for Pointbase

- 2.18. Example properties for PostgreSQL

- 2.19. Example properties for Sybase

Table of Contents

Table of Contents

OpenJPA is Apache's implementation of Sun's Java Persistence API (JPA) specification for the transparent persistence of Java objects. This document provides an overview of the JPA standard and technical details on the use of OpenJPA.

This document is intended for OpenJPA users. It is divided into several parts:

The JPA Overview describes the fundamentals of the JPA specification.

The OpenJPA Reference Guide contains detailed documentation on all aspects of OpenJPA. Browse through this guide to familiarize yourself with the many advanced features and customization opportunities OpenJPA provides. Later, you can use the guide when you need details on a specific aspect of OpenJPA.

Table of Contents

- 1. Introduction

- 2. Why JPA?

- 3. Java Persistence API Architecture

- 4. Entity

- 5. Metadata

- 6. Persistence

- 7. EntityManagerFactory

- 8. EntityManager

- 9. Transaction

- 10. JPA Query

- 1. JPQL API

- 2. JPQL Language Reference

- 2.1. JPQL Statement Types

- 2.2. JPQL Abstract Schema Types and Query Domains

- 2.3. JPQL FROM Clause and Navigational Declarations

- 2.4. JPQL WHERE Clause

- 2.5. JPQL Conditional Expressions

- 2.5.1. JPQL Literals

- 2.5.2. JPQL Identification Variables

- 2.5.3. JPQL Path Expressions

- 2.5.4. JPQL Input Parameters

- 2.5.5. JPQL Conditional Expression Composition

- 2.5.6. JPQL Operators and Operator Precedence

- 2.5.7. JPQL Between Expressions

- 2.5.8. JPQL In Expressions

- 2.5.9. JPQL Like Expressions

- 2.5.10. JPQL Null Comparison Expressions

- 2.5.11. JPQL Empty Collection Comparison Expressions

- 2.5.12. JPQL Collection Member Expressions

- 2.5.13. JPQL Exists Expressions

- 2.5.14. JPQL All or Any Expressions

- 2.5.15. JPQL Subqueries

- 2.5.16. JPQL Functional Expressions

- 2.6. JPQL GROUP BY, HAVING

- 2.7. JPQL SELECT Clause

- 2.8. JPQL ORDER BY Clause

- 2.9. JPQL Bulk Update and Delete

- 2.10. JPQL Null Values

- 2.11. JPQL Equality and Comparison Semantics

- 2.12. JPQL BNF

- 11. SQL Queries

- 12. Mapping Metadata

- 13. Conclusion

Table of Contents

The Java Persistence API (JPA) is a specification from Sun Microsystems for the persistence of Java objects to any relational datastore. JPA requires J2SE 1.5 (also referred to as "Java 5") or higher, as it makes heavy use of new Java language features such as annotations and generics. This document provides an overview of JPA. Unless otherwise noted, the information presented applies to all JPA implementations.

Note

For coverage of OpenJPA's many extensions to the JPA specification, see the Reference Guide.

This document is intended for developers who want to learn about JPA in order to use it in their applications. It assumes that you have a strong knowledge of object-oriented concepts and Java, including Java 5 annotations and generics. It also assumes some experience with relational databases and the Structured Query Language (SQL).

Persistent data is information that can outlive the program that creates it. The majority of complex programs use persistent data: GUI applications need to store user preferences across program invocations, web applications track user movements and orders over long periods of time, etc.

Lightweight persistence is the storage and retrieval of persistent data with little or no work from you, the developer. For example, Java serialization is a form of lightweight persistence because it can be used to persist Java objects directly to a file with very little effort. Serialization's capabilities as a lightweight persistence mechanism pale in comparison to those provided by JPA, however. The next chapter compares JPA to serialization and other available persistence mechanisms.

Java developers who need to store and retrieve persistent data already have several options available to them: serialization, JDBC, JDO, proprietary object-relational mapping tools, object databases, and EJB 2 entity beans. Why introduce yet another persistence framework? The answer to this question is that with the exception of JDO, each of the aforementioned persistence solutions has severe limitations. JPA attempts to overcome these limitations, as illustrated by the table below.

Table 2.1. Persistence Mechanisms

| Supports: | Serialization | JDBC | ORM | ODB | EJB 2 | JDO | JPA |

|---|---|---|---|---|---|---|---|

| Java Objects | Yes | No | Yes | Yes | Yes | Yes | Yes |

| Advanced OO Concepts | Yes | No | Yes | Yes | No | Yes | Yes |

| Transactional Integrity | No | Yes | Yes | Yes | Yes | Yes | Yes |

| Concurrency | No | Yes | Yes | Yes | Yes | Yes | Yes |

| Large Data Sets | No | Yes | Yes | Yes | Yes | Yes | Yes |

| Existing Schema | No | Yes | Yes | No | Yes | Yes | Yes |

| Relational and Non-Relational Stores | No | No | No | No | Yes | Yes | No |

| Queries | No | Yes | Yes | Yes | Yes | Yes | Yes |

| Strict Standards / Portability | Yes | No | No | No | Yes | Yes | Yes |

| Simplicity | Yes | Yes | Yes | Yes | No | Yes | Yes |

Serialization is Java's built-in mechanism for transforming an object graph into a series of bytes, which can then be sent over the network or stored in a file. Serialization is very easy to use, but it is also very limited. It must store and retrieve the entire object graph at once, making it unsuitable for dealing with large amounts of data. It cannot undo changes that are made to objects if an error occurs while updating information, making it unsuitable for applications that require strict data integrity. Multiple threads or programs cannot read and write the same serialized data concurrently without conflicting with each other. It provides no query capabilities. All these factors make serialization useless for all but the most trivial persistence needs.

Many developers use the Java Database Connectivity (JDBC) APIs to manipulate persistent data in relational databases. JDBC overcomes most of the shortcomings of serialization: it can handle large amounts of data, has mechanisms to ensure data integrity, supports concurrent access to information, and has a sophisticated query language in SQL. Unfortunately, JDBC does not duplicate serialization's ease of use. The relational paradigm used by JDBC was not designed for storing objects, and therefore forces you to either abandon object-oriented programming for the portions of your code that deal with persistent data, or to find a way of mapping object-oriented concepts like inheritance to relational databases yourself.

There are many proprietary software products that can perform the mapping between objects and relational database tables for you. These object-relational mapping (ORM) frameworks allow you to focus on the object model and not concern yourself with the mismatch between the object-oriented and relational paradigms. Unfortunately, each of these product has its own set of APIs. Your code becomes tied to the proprietary interfaces of a single vendor. If the vendor raises prices, fails to fix show-stopping bugs, or falls behind in features, you cannot switch to another product without rewriting all of your persistence code. This is referred to as vendor lock-in.

Rather than map objects to relational databases, some software companies have developed a form of database designed specifically to store objects. These object databases (ODBs) are often much easier to use than object-relational mapping software. The Object Database Management Group (ODMG) was formed to create a standard API for accessing object databases; few object database vendors, however, comply with the ODMG's recommendations. Thus, vendor lock-in plagues object databases as well. Many companies are also hesitant to switch from tried-and-true relational systems to the relatively unknown object database technology. Fewer data-analysis tools are available for object database systems, and there are vast quantities of data already stored in older relational databases. For all of these reasons and more, object databases have not caught on as well as their creators hoped.

The Enterprise Edition of the Java platform introduced entity Enterprise Java Beans (EJBs). EJB 2.x entities are components that represent persistent information in a datastore. Like object-relational mapping solutions, EJB 2.x entities provide an object-oriented view of persistent data. Unlike object-relational software, however, EJB 2.x entities are not limited to relational databases; the persistent information they represent may come from an Enterprise Information System (EIS) or other storage device. Also, EJB 2.x entities use a strict standard, making them portable across vendors. Unfortunately, the EJB 2.x standard is somewhat limited in the object-oriented concepts it can represent. Advanced features like inheritance, polymorphism, and complex relations are absent. Additionally, EBJ 2.x entities are difficult to code, and they require heavyweight and often expensive application servers to run.

The JDO specification uses an API that is strikingly similar to JPA. JDO, however, supports non-relational databases, a feature that some argue dilutes the specification.

JPA combines the best features from each of the persistence mechanisms listed above. Creating entities under JPA is as simple as creating serializable classes. JPA supports the large data sets, data consistency, concurrent use, and query capabilities of JDBC. Like object-relational software and object databases, JPA allows the use of advanced object-oriented concepts such as inheritance. JPA avoids vendor lock-in by relying on a strict specification like JDO and EJB 2.x entities. JPA focuses on relational databases. And like JDO, JPA is extremely easy to use.

Note

OpenJPA typically stores data in relational databases, but can be customized for use with non-relational datastores as well.

JPA is not ideal for every application. For many applications, though, it provides an exciting alternative to other persistence mechanisms.

Table of Contents

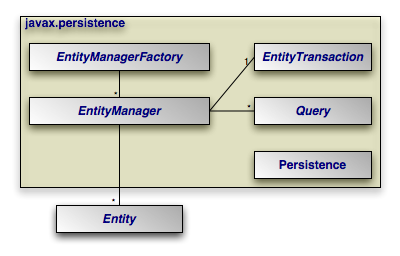

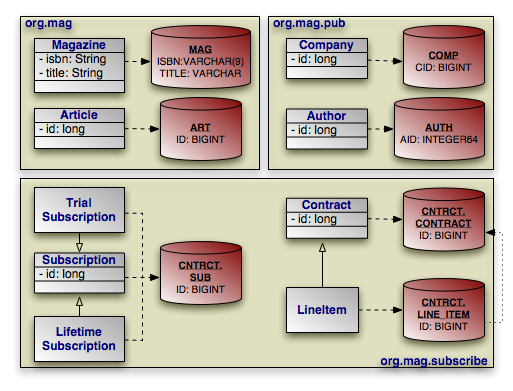

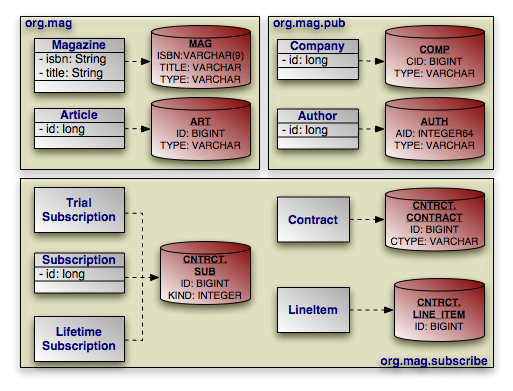

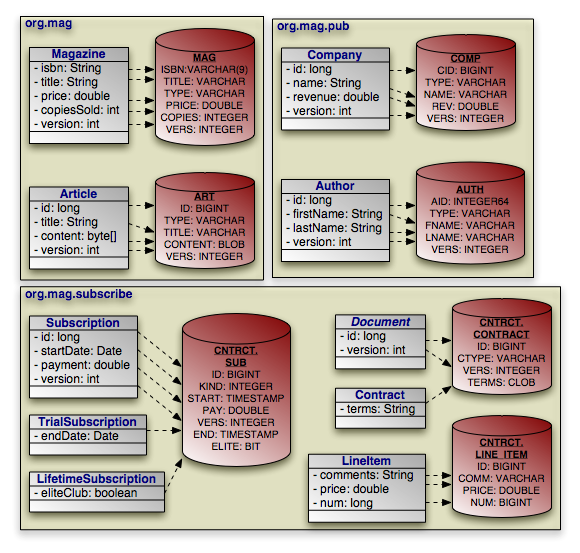

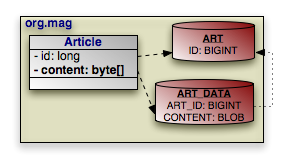

The diagram below illustrates the relationships between the primary components of the JPA architecture.

|

Note

A number of the depicted interfaces are only required outside of an

EJB3-compliant application server. In an application server,

EntityManager instances are typically injected, rendering the

EntityManagerFactory unnecessary. Also, transactions

within an application server are handled using standard application server

transaction controls. Thus, the EntityTransaction also

goes unused.

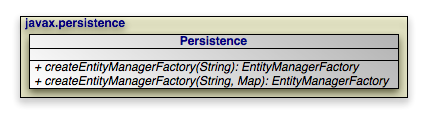

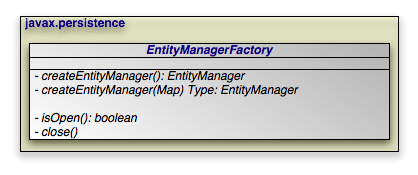

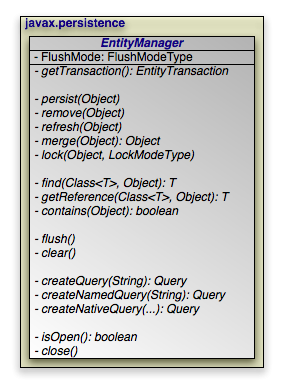

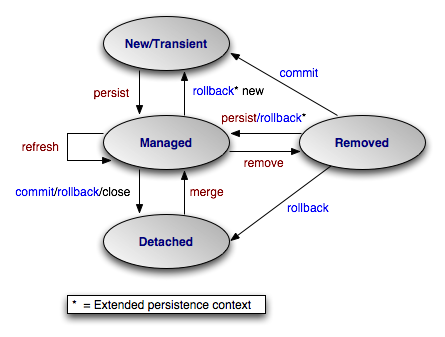

Persistence: Thejavax.persistence.Persistenceclass contains static helper methods to obtainEntityManagerFactoryinstances in a vendor-neutral fashion.EntityManagerFactory: Thejavax.persistence.EntityManagerFactoryclass is a factory forEntityManagers.EntityManager: Thejavax.persistence.EntityManageris the primary JPA interface used by applications. EachEntityManagermanages a set of persistent objects, and has APIs to insert new objects and delete existing ones. When used outside the container, there is a one-to-one relationship between anEntityManagerand anEntityTransaction.EntityManagers also act as factories forQueryinstances.Entity: Entites are persistent objects that represent datastore records.EntityTransaction: EachEntityManagerhas a one-to-one relation with a singlejavax.persistence.EntityTransaction.EntityTransactions allow operations on persistent data to be grouped into units of work that either completely succeed or completely fail, leaving the datastore in its original state. These all-or-nothing operations are important for maintaining data integrity.Query: Thejavax.persistence.Queryinterface is implemented by each JPA vendor to find persistent objects that meet certain criteria. JPA standardizes support for queries using both the Java Persistence Query Language (JPQL) and the Structured Query Language (SQL). You obtainQueryinstances from anEntityManager.

The example below illustrates how the JPA interfaces interact to execute a JPQL query and update persistent objects. The example assumes execution outside a container.

Example 3.1. Interaction of Interfaces Outside Container

// get an EntityManagerFactory using the Persistence class; typically

// the factory is cached for easy repeated use

EntityManagerFactory factory = Persistence.createEntityManagerFactory(null);

// get an EntityManager from the factory

EntityManager em = factory.createEntityManager(PersistenceContextType.EXTENDED);

// updates take place within transactions

EntityTransaction tx = em.getTransaction();

tx.begin();

// query for all employees who work in our research division

// and put in over 40 hours a week average

Query query = em.createQuery("select e from Employee e where "

+ "e.division.name = 'Research' AND e.avgHours > 40");

List results = query.getResultList ();

// give all those hard-working employees a raise

for (Object res : results) {

Employee emp = (Employee) res;

emp.setSalary(emp.getSalary() * 1.1);

}

// commit the updates and free resources

tx.commit();

em.close();

factory.close();

Within a container, the EntityManager will be injected

and transactions will be handled declaratively. Thus, the in-container version

of the example consists entirely of business logic:

Example 3.2. Interaction of Interfaces Inside Container

// query for all employees who work in our research division

// and put in over 40 hours a week average - note that the EntityManager em

// is injected using a @Resource annotation

Query query = em.createQuery("select e from Employee e where "

+ "e.division.name = 'Research' and e.avgHours > 40");

List results = query.getResultList();

// give all those hard-working employees a raise

for (Object res : results) {

emp = (Employee) res;

emp.setSalary(emp.getSalary() * 1.1);

}

The remainder of this document explores the JPA interfaces in detail. We present them in roughly the order that you will use them as you develop your application.

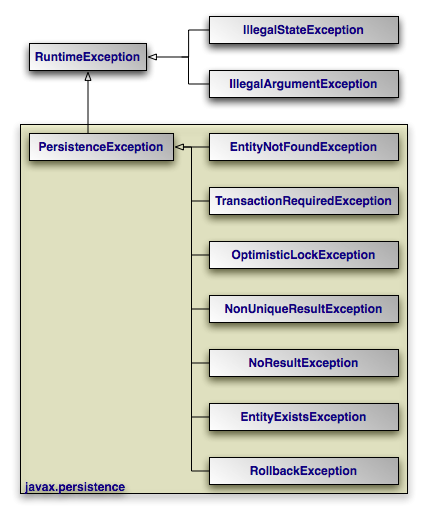

|

The diagram above depicts the JPA exception architecture. All

exceptions are unchecked. JPA uses standard exceptions where

appropriate, most notably IllegalArgumentExceptions and

IllegalStateExceptions. The specification also provides

a few JPA-specific exceptions in the javax.persistence

package. These exceptions should be self-explanatory. See the

Javadoc for

additional details on JPA exceptions.

Note

All exceptions thrown by OpenJPA implement

org.apache.openjpa.util.ExceptionInfo to provide you with

additional error information.

Table of Contents

JPA recognizes two types of persistent classes: entity

classes and embeddable classes. Each persistent instance of

an entity class - each entity - represents a unique

datastore record. You can use the EntityManager to find

an entity by its persistent identity (covered later in this chapter), or use a

Query to find entities matching certain criteria.

An instance of an embeddable class, on the other hand, is only stored as part of

a separate entity. Embeddable instances have no persistent identity, and are

never returned directly from the EntityManager or from a

Query unless the query uses a projection on owning class

to the embedded instance. For example, if Address is

embedded in Company, then

a query "SELECT a FROM Address a" will never return the

embedded Address of Company;

but a projection query such as

"SELECT c.address FROM Company c" will.

Despite these differences, there are few distinctions between entity classes and embeddable classes. In fact, writing either type of persistent class is a lot like writing any other class. There are no special parent classes to extend from, field types to use, or methods to write. This is one important way in which JPA makes persistence transparent to you, the developer.

Note

JPA supports both fields and JavaBean properties as persistent state. For simplicity, however, we will refer to all persistent state as persistent fields, unless we want to note a unique aspect of persistent properties.

Example 4.1. Persistent Class

package org.mag;

/**

* Example persistent class. Notice that it looks exactly like any other

* class. JPA makes writing persistent classes completely transparent.

*/

public class Magazine {

private String isbn;

private String title;

private Set articles = new HashSet();

private Article coverArticle;

private int copiesSold;

private double price;

private Company publisher;

private int version;

protected Magazine() {

}

public Magazine(String title, String isbn) {

this.title = title;

this.isbn = isbn;

}

public void publish(Company publisher, double price) {

this.publisher = publisher;

publisher.addMagazine(this);

this.price = price;

}

public void sell() {

copiesSold++;

publisher.addRevenue(price);

}

public void addArticle(Article article) {

articles.add(article);

}

// rest of methods omitted

}

There are very few restrictions placed on persistent classes. Still, it never hurts to familiarize yourself with exactly what JPA does and does not support.

The JPA specification requires that all persistent classes have a no-arg constructor. This constructor may be public or protected. Because the compiler automatically creates a default no-arg constructor when no other constructor is defined, only classes that define constructors must also include a no-arg constructor.

Note

OpenJPA's enhancer will automatically add a protected no-arg constructor to your class when required. Therefore, this restriction does not apply when using the enhancer. See Section 2, “ Enhancement ” of the Reference Guide for details.

Entity classes may not be final. No method of an entity class can be final.

Note

OpenJPA supports final classes and final methods.

All entity classes must declare one or more fields which together form the

persistent identity of an instance. These are called identity

or primary key fields. In our

Magazine class, isbn and title

are identity fields, because no two magazine records in the datastore can have

the same isbn and title values.

Section 2.2, “

Id

” will show you how to denote your

identity fields in JPA metadata. Section 2, “

Entity Identity

”

below examines persistent identity.

Note

OpenJPA fully supports identity fields, but does not require them. See Section 4, “ Object Identity ” of the Reference Guide for details.

The version field in our Magazine

class may seem out of place. JPA uses a version field in your entities to detect

concurrent modifications to the same datastore record. When the JPA runtime

detects an attempt to concurrently modify the same record, it throws an

exception to the transaction attempting to commit last. This prevents

overwriting the previous commit with stale data.

A version field is not required, but without one concurrent threads or processes might succeed in making conflicting changes to the same record at the same time. This is unacceptable to most applications. Section 2.5, “ Version ” shows you how to designate a version field in JPA metadata.

The version field must be an integral type ( int,

Long, etc) or a

java.sql.Timestamp. You should consider version fields immutable.

Changing the field value has undefined results.

Note

OpenJPA fully supports version fields, but does not require them for concurrency detection. OpenJPA can maintain surrogate version values or use state comparisons to detect concurrent modifications. See Section 7, “ Additional JPA Mappings ” in the Reference Guide.

JPA fully supports inheritance in persistent classes. It allows persistent classes to inherit from non-persistent classes, persistent classes to inherit from other persistent classes, and non-persistent classes to inherit from persistent classes. It is even possible to form inheritance hierarchies in which persistence skips generations. There are, however, a few important limitations:

Persistent classes cannot inherit from certain natively-implemented system classes such as

java.net.Socketandjava.lang.Thread.If a persistent class inherits from a non-persistent class, the fields of the non-persistent superclass cannot be persisted.

All classes in an inheritance tree must use the same identity type. We cover entity identity in Section 2, “ Entity Identity ”.

JPA manages the state of all persistent fields. Before you access persistent state, the JPA runtime makes sure that it has been loaded from the datastore. When you set a field, the runtime records that it has changed so that the new value will be persisted. This allows you to treat the field in exactly the same way you treat any other field - another aspect of JPA's transparency.

JPA does not support static or final fields. It does, however, include built-in support for most common field types. These types can be roughly divided into three categories: immutable types, mutable types, and relations.

Immutable types, once created, cannot be changed. The only way to alter a persistent field of an immutable type is to assign a new value to the field. JPA supports the following immutable types:

All primitives (

int, float, byte, etc)All primitive wrappers (

java.lang.Integer, java.lang.Float, java.lang.Byte, etc)java.lang.Stringjava.math.BigIntegerjava.math.BigDecimal

JPA also supports byte[], Byte[],

char[], and Character[] as

immutable types. That is, you can persist fields of these types,

but you should not manipulate individual array indexes without resetting the

array into the persistent field.

Persistent fields of mutable types can be altered without assigning the field a new value. Mutable types can be modified directly through their own methods. The JPA specification requires that implementations support the following mutable field types:

java.util.Datejava.util.Calendarjava.sql.Datejava.sql.Timestampjava.sql.TimeEnums

Entity types (relations between entities)

Embeddable types

java.util.Collections of entitiesjava.util.Sets of entitiesjava.util.Lists of entitiesjava.util.Maps in which each entry maps the value of one of a related entity's fields to that entity.

Collection and map types may be parameterized.

Most JPA implementations also have support for persisting serializable values as binary data in the datastore. Chapter 5, Metadata has more information on persisting serializable types.

Note

OpenJPA also supports arrays, java.lang.Number,

java.util.Locale, all JDK 1.2 Set,

List, and Map types,

and many other mutable and immutable field types. OpenJPA also allows you to

plug in support for custom types.

This section detailed all of the restrictions JPA places on persistent classes. While it may seem like we presented a lot of information, you will seldom find yourself hindered by these restrictions in practice. Additionally, there are often ways of using JPA's other features to circumvent any limitations you run into.

Java recognizes two forms of object identity: numeric identity and qualitative

identity. If two references are numerically identical, then

they refer to the same JVM instance in memory. You can test for this using the

== operator. Qualitative identity, on

the other hand, relies on some user-defined criteria to determine whether two

objects are "equal". You test for qualitative identity using the

equals method. By default, this method simply relies on numeric

identity.

JPA introduces another form of object identity, called entity identity or persistent identity. Entity identity tests whether two persistent objects represent the same state in the datastore.

The entity identity of each persistent instance is encapsulated in its identity field(s). If two entities of the same type have the same identity field values, then the two entities represent the same state in the datastore. Each entity's identity field values must be unique among all other entites of the same type.

Identity fields must be primitives, primitive wrappers,

Strings, Dates,

Timestamps, or embeddable types.

Note

OpenJPA supports entities as identity fields, as the Reference Guide discusses

in Section 4.2, “

Entities as Identity Fields

”. For legacy schemas with binary

primary key columns, OpenJPA also supports using identity fields of type

byte[]. When you use a byte[]

identity field, you must create an identity class. Identity classes are

covered below.

Warning

Changing the fields of an embeddable instance while it is assigned to an identity field has undefined results. Always treat embeddable identity instances as immutable objects in your applications.

If you are dealing with a single persistence context (see

Section 3, “

Persistence Context

”), then you do not

have to compare identity fields to test whether two entity references represent

the same state in the datastore. There is a much easier way: the ==

operator. JPA requires that each persistence context maintain only

one JVM object to represent each unique datastore record. Thus, entity identity

is equivalent to numeric identity within a persistence context. This is referred

to as the uniqueness requirement.

The uniqueness requirement is extremely important - without it, it would be impossible to maintain data integrity. Think of what could happen if two different objects in the same transaction were allowed to represent the same persistent data. If you made different modifications to each of these objects, which set of changes should be written to the datastore? How would your application logic handle seeing two different "versions" of the same data? Thanks to the uniqueness requirement, these questions do not have to be answered.

If your entity has only one identity field, you can use the value of that field

as the entity's identity object in all

EntityManager APIs. Otherwise, you must supply an

identity class to use for identity objects. Your identity class must meet the

following criteria:

The class must be public.

The class must be serializable.

The class must have a public no-args constructor.

The names of the non-static fields or properties of the class must be the same as the names of the identity fields or properties of the corresponding entity class, and the types must be identical.

The

equalsandhashCodemethods of the class must use the values of all fields or properties corresponding to identity fields or properties in the entity class.If the class is an inner class, it must be

static.All entity classes related by inheritance must use the same identity class, or else each entity class must have its own identity class whose inheritance hierarchy mirrors the inheritance hierarchy of the owning entity classes (see Section 2.1.1, “ Identity Hierarchies ”).

Note

Though you may still create identity classes by hand, OpenJPA provides the

appidtool to automatically generate proper identity

classes based on your identity fields. See

Section 4.3, “

Application Identity Tool

” of the Reference Guide.

Example 4.2. Identity Class

This example illustrates a proper identity class for an entity with multiple identity fields.

/**

* Persistent class using application identity.

*/

public class Magazine {

private String isbn; // identity field

private String title; // identity field

// rest of fields and methods omitted

/**

* Application identity class for Magazine.

*/

public static class MagazineId {

// each identity field in the Magazine class must have a

// corresponding field in the identity class

public String isbn;

public String title;

/**

* Equality must be implemented in terms of identity field

* equality, and must use instanceof rather than comparing

* classes directly (some JPA implementations may subclass the

* identity class).

*/

public boolean equals(Object other) {

if (other == this)

return true;

if (!(other instanceof MagazineId))

return false;

MagazineId mi = (MagazineId) other;

return (isbn == mi.isbn

|| (isbn != null && isbn.equals(mi.isbn)))

&& (title == mi.title

|| (title != null && title.equals(mi.title)));

}

/**

* Hashcode must also depend on identity values.

*/

public int hashCode() {

return ((isbn == null) ? 0 : isbn.hashCode())

^ ((title == null) ? 0 : title.hashCode());

}

public String toString() {

return isbn + ":" + title;

}

}

}

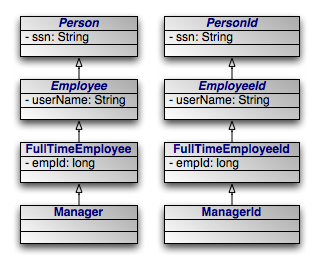

|

An alternative to having a single identity class for an entire inheritance hierarchy is to have one identity class per level in the inheritance hierarchy. The requirements for using a hierarchy of identity classes are as follows:

The inheritance hierarchy of identity classes must exactly mirror the hierarchy of the persistent classes that they identify. In the example pictured above, abstract class

Personis extended by abstract classEmployee, which is extended by non-abstract classFullTimeEmployee, which is extended by non-abstract classManager. The corresponding identity classes, then, are an abstractPersonIdclass, extended by an abstractEmployeeIdclass, extended by a non-abstractFullTimeEmployeeIdclass, extended by a non-abstractManagerIdclass.Subclasses in the identity hierarchy may define additional identity fields until the hierarchy becomes non-abstract. In the aforementioned example,

Persondefines an identity fieldssn,Employeedefines additional identity fielduserName, andFullTimeEmployeeadds a final identity field,empId. However,Managermay not define any additional identity fields, since it is a subclass of a non-abstract class. The hierarchy of identity classes, of course, must match the identity field definitions of the persistent class hierarchy.It is not necessary for each abstract class to declare identity fields. In the previous example, the abstract

PersonandEmployeeclasses could declare no identity fields, and the first concrete subclassFullTimeEmployeecould define one or more identity fields.All subclasses of a concrete identity class must be

equalsandhashCode-compatible with the concrete superclass. This means that in our example, aManagerIdinstance and aFullTimeEmployeeIdinstance with the same identity field values should have the same hash code, and should compare equal to each other using theequalsmethod of either one. In practice, this requirement reduces to the following coding practices:Use

instanceofinstead of comparingClassobjects in theequalsmethods of your identity classes.An identity class that extends another non-abstract identity class should not override

equalsorhashCode.

It is often necessary to perform various actions at different stages of a persistent object's lifecycle. JPA includes a variety of callbacks methods for monitoring changes in the lifecycle of your persistent objects. These callbacks can be defined on the persistent classes themselves and on non-persistent listener classes.

Every persistence event has a corresponding callback method marker. These markers are shared between persistent classes and their listeners. You can use these markers to designate a method for callback either by annotating that method or by listing the method in the XML mapping file for a given class. The lifecycle events and their corresponding method markers are:

PrePersist: Methods marked with this annotation will be invoked before an object is persisted. This could be used for assigning primary key values to persistent objects. This is equivalent to the XML element tagpre-persist.PostPersist: Methods marked with this annotation will be invoked after an object has transitioned to the persistent state. You might want to use such methods to update a screen after a new row is added. This is equivalent to the XML element tagpost-persist.PostLoad: Methods marked with this annotation will be invoked after all eagerly fetched fields of your class have been loaded from the datastore. No other persistent fields can be accessed in this method. This is equivalent to the XML element tagpost-load.PostLoadis often used to initialize non-persistent fields whose values depend on the values of persistent fields, such as a complex datastructure.PreUpdate: Methods marked with this annotation will be invoked just the persistent values in your objects are flushed to the datastore. This is equivalent to the XML element tagpre-update.PreUpdateis the complement toPostLoad. While methods marked withPostLoadare most often used to initialize non-persistent values from persistent data, methods annotated withPreUpdateis normally used to set persistent fields with information cached in non-persistent data.PostUpdate: Methods marked with this annotation will be invoked after changes to a given instance have been stored to the datastore. This is useful for clearing stale data cached at the application layer. This is equivalent to the XML element tagpost-update.PreRemove: Methods marked with this annotation will be invoked before an object transactions to the deleted state. Access to persistent fields is valid within this method. You might use this method to cascade the deletion to related objects based on complex criteria, or to perform other cleanup. This is equivalent to the XML element tagpre-remove.PostRemove: Methods marked with this annotation will be invoked after an object has been marked as to be deleted. This is equivalent to the XML element tagpost-remove.

When declaring callback methods on a persistent class, any method may be used which takes no arguments and is not shared with any property access fields. Multiple events can be assigned to a single method as well.

Below is an example of how to declare callback methods on persistent classes:

/**

* Example persistent class declaring our entity listener.

*/

@Entity

public class Magazine {

@Transient

private byte[][] data;

@ManyToMany

private List<Photo> photos;

@PostLoad

public void convertPhotos() {

data = new byte[photos.size()][];

for (int i = 0; i < photos.size(); i++)

data[i] = photos.get(i).toByteArray();

}

@PreDelete

public void logMagazineDeletion() {

getLog().debug("deleting magazine containing" + photos.size()

+ " photos.");

}

}

In an XML mapping file, we can define the same methods without annotations:

<entity class="Magazine">

<pre-remove>logMagazineDeletion</pre-remove>

<post-load>convertPhotos</post-load>

</entity>

Note

We fully explore persistence metadata annotations and XML in Chapter 5, Metadata .

Mixing lifecycle event code into your persistent classes is not always ideal. It

is often more elegant to handle cross-cutting lifecycle events in a

non-persistent listener class. JPA allows for this, requiring only that listener

classes have a public no-arg constructor. Like persistent classes, your listener

classes can consume any number of callbacks. The callback methods must take in a

single java.lang.Object argument which represents the

persistent object that triggered the event.

Entities can enumerate listeners using the EntityListeners

annotation. This annotation takes an array of listener classes as

its value.

Below is an example of how to declare an entity and its corresponding listener classes.

/**

* Example persistent class declaring our entity listener.

*/

@Entity

@EntityListeners({ MagazineLogger.class, ... })

public class Magazine {

// ... //

}

/**

* Example entity listener.

*/

public class MagazineLogger {

@PostPersist

public void logAddition(Object pc) {

getLog ().debug ("Added new magazine:" + ((Magazine) pc).getTitle ());

}

@PreRemove

public void logDeletion(Object pc) {

getLog().debug("Removing from circulation:" +

((Magazine) pc).getTitle());

}

}

In XML, we define both the listeners and their callback methods as so:

<entity class="Magazine">

<entity-listeners>

<entity-listener class="MagazineLogger">

<post-persist>logAddition</post-persist>

<pre-remove>logDeletion</pre-remove>

</entity-listener>

</entity-listeners>

</entity>

Entity listener methods are invoked in a specific order when a given event is fired. So-called default listeners are invoked first: these are listeners which have been defined in a package annotation or in the root element of XML mapping files. Next, entity listeners are invoked in the order of the inheritance hierarchy, with superclass listeners being invoked before subclass listeners. Finally, if an entity has multiple listeners for the same event, the listeners are invoked in declaration order.

You can exclude default listeners and listeners defined in superclasses from the invocation chain through the use of two class-level annotations:

ExcludeDefaultListeners: This annotation indicates that no default listeners will be invoked for this class, or any of its subclasses. The XML equivalent is the emptyexclude-default-listenerselement.ExcludeSuperclassListeners: This annotation will cause OpenJPA to skip invoking any listeners declared in superclasses. The XML equivalent is the emptyexclude-superclass-listenerselement.

Table of Contents

JPA requires that you accompany each persistent class with persistence metadata. This metadata serves three primary purposes:

To identify persistent classes.

To override default JPA behavior.

To provide the JPA implementation with information that it cannot glean from simply reflecting on the persistent class.

Persistence metadata is specified using either the Java 5 annotations defined in

the javax.persistence package, XML mapping files, or a

mixture of both. In the latter case, XML declarations override conflicting

annotations. If you choose to use XML metadata, the XML files must be available

at development and runtime, and must be discoverable via either of two

strategies:

In a resource named

orm.xmlplaced in aMETA-INFdirectory within a directory in your classpath or within a jar archive containing your persistent classes.Declared in your

persistence.xmlconfiguration file. In this case, each XML metadata file must be listed in amapping-fileelement whose content is either a path to the given file or a resource location available to the class' class loader.

We describe the standard metadata annotations and XML equivalents throughout this chapter. The full schema for XML mapping files is available in Section 3, “ XML Schema ”. JPA also standardizes relational mapping metadata and named query metadata, which we discuss in Chapter 12, Mapping Metadata and Section 1.10, “ Named Queries ” respectively.

Note

OpenJPA defines many useful annotations beyond the standard set. See Section 3, “ Additional JPA Metadata ” and Section 4, “ Metadata Extensions ” in the Reference Guide for details. There are currently no XML equivalents for these extension annotations.

Note

Persistence metadata may be used to validate the contents of your entities prior to communicating with the database. This differs from mapping meta data which is primarily used for schema generation. For example if you indicate that a relationship is not optional (e.g. @Basic(optional=false)) OpenJPA will validate that the variable in your entity is not null before inserting a row in the database.

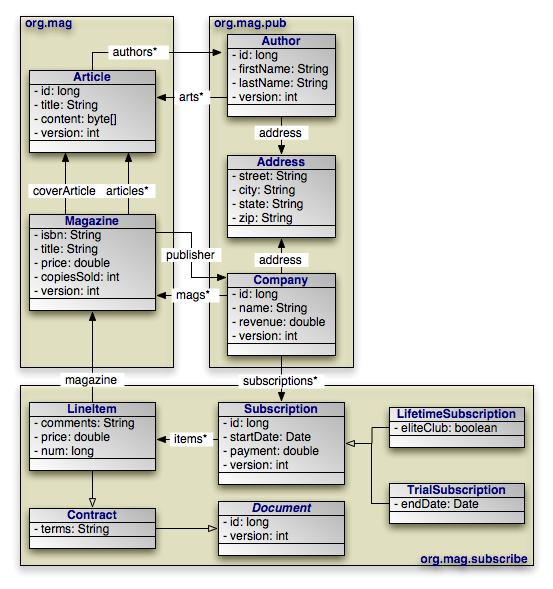

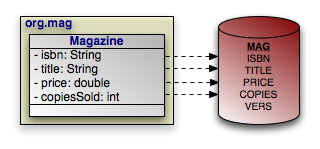

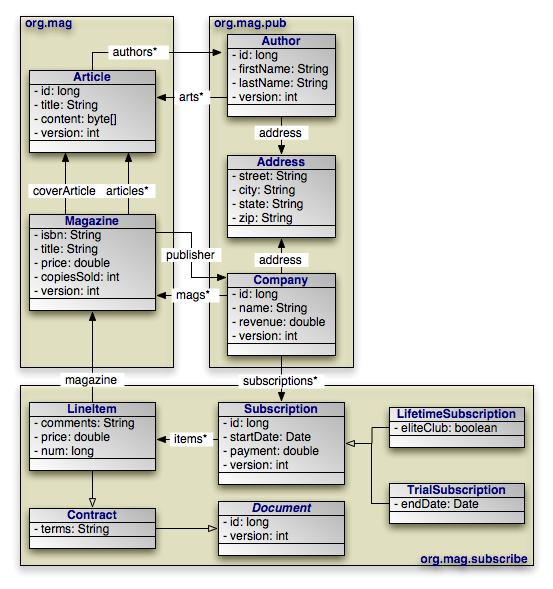

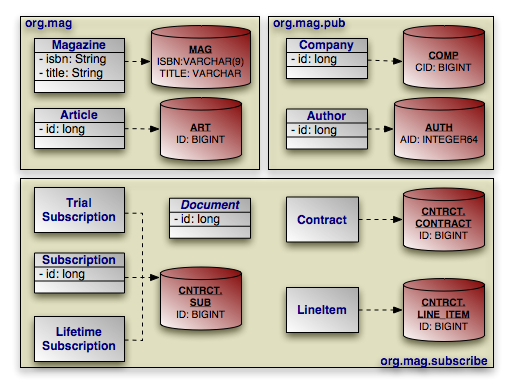

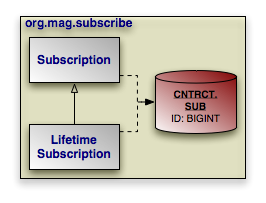

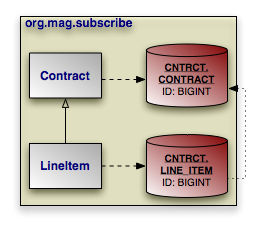

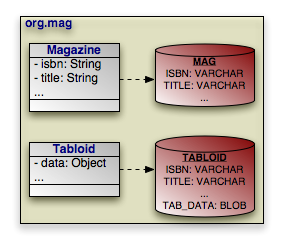

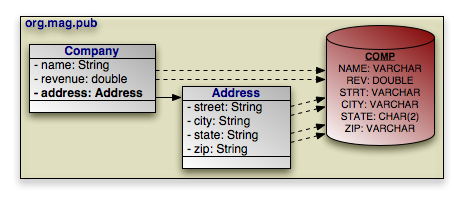

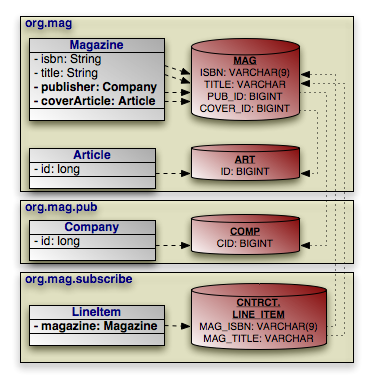

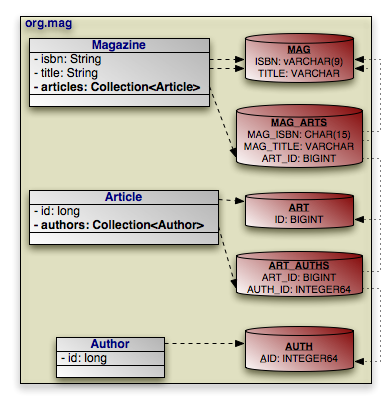

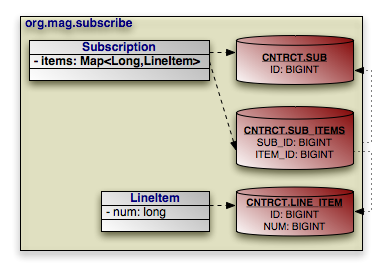

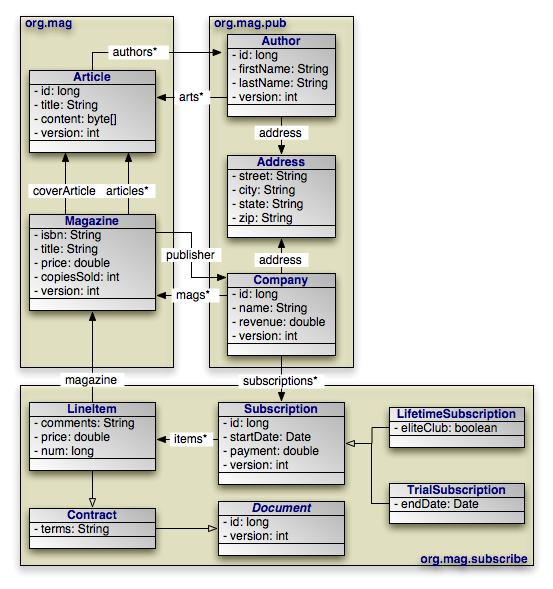

|

Through the course of this chapter, we will create the persistent object model above.

The following metadata annotations and XML elements apply to persistent class declarations.

The Entity annotation denotes an entity class. All entity

classes must have this annotation. The Entity annotation

takes one optional property:

String name: Name used to refer to the entity in queries. Must not be a reserved literal in JPQL. Defaults to the unqualified name of the entity class.

The equivalent XML element is entity. It has the following

attributes:

class: The entity class. This attribute is required.name: Named used to refer to the class in queries. See the name property above.access: The access type to use for the class. Must either beFIELDorPROPERTY. For details on access types, see Section 2, “ Field and Property Metadata ”.

Note

OpenJPA uses a process called enhancement to modify the bytecode of entities for transparent lazy loading and immediate dirty tracking. See Section 2, “ Enhancement ” in the Reference Guide for details on enhancement.

As we discussed in Section 2.1, “

Identity Class

”,

entities with multiple identity fields must use an identity class

to encapsulate their persistent identity. The IdClass

annotation specifies this class. It accepts a single

java.lang.Class value.

The equivalent XML element is id-class, which has a single

attribute:

class: Set this required attribute to the name of the identity class.

A mapped superclass is a non-entity class that can define

persistent state and mapping information for entity subclasses. Mapped

superclasses are usually abstract. Unlike true entities, you cannot query a

mapped superclass, pass a mapped superclass instance to any

EntityManager or Query methods, or declare a

persistent relation with a mapped superclass target. You denote a mapped

superclass with the MappedSuperclass marker annotation.

The equivalent XML element is mapped-superclass. It expects

the following attributes:

class: The entity class. This attribute is required.access: The access type to use for the class. Must either beFIELDorPROPERTY. For details on access types, see Section 2, “ Field and Property Metadata ”.

Note

OpenJPA allows you to query on mapped superclasses. A query on a mapped superclass will return all matching subclass instances. OpenJPA also allows you to declare relations to mapped superclass types; however, you cannot query across these relations.

The Embeddable annotation designates an embeddable

persistent class. Embeddable instances are stored as part of the record of their

owning instance. All embeddable classes must have this annotation.

A persistent class can either be an entity or an embeddable class, but not both.

The equivalent XML element is embeddable. It understands the

following attributes:

class: The entity class. This attribute is required.access: The access type to use for the class. Must either beFIELDorPROPERTY. For details on access types, see Section 2, “ Field and Property Metadata ”.

Note

OpenJPA allows a persistent class to be both an entity and an embeddable class. Instances of the class will act as entites when persisted explicitly or assigned to non-embedded fields of entities. Instances will act as embedded values when assigned to embedded fields of entities.

To signal that a class is both an entity and an embeddable class in OpenJPA,

simply add both the @Entity and the @Embeddable

annotations to the class.

An entity may list its lifecycle event listeners in the

EntityListeners annotation. This value of this annotation is an

array of the listener Class es for the entity. The

equivalent XML element is entity-listeners. For more details

on entity listeners, see Section 3, “

Lifecycle Callbacks

”.

Here are the class declarations for our persistent object model, annotated with

the appropriate persistence metadata. Note that Magazine

declares an identity class, and that Document and

Address are a mapped superclass and an embeddable class,

respectively. LifetimeSubscription and

TrialSubscription override the default entity name to supply a

shorter alias for use in queries.

Example 5.1. Class Metadata

package org.mag;

@Entity

@IdClass(Magazine.MagazineId.class)

public class Magazine {

...

public static class MagazineId {

...

}

}

@Entity

public class Article {

...

}

package org.mag.pub;

@Entity

public class Company {

...

}

@Entity

public class Author {

...

}

@Embeddable

public class Address {

...

}

package org.mag.subscribe;

@MappedSuperclass

public abstract class Document {

...

}

@Entity

public class Contract

extends Document {

...

}

@Entity

public class Subscription {

...

@Entity

public static class LineItem

extends Contract {

...

}

}

@Entity(name="Lifetime")

public class LifetimeSubscription

extends Subscription {

...

}

@Entity(name="Trial")

public class TrialSubscription

extends Subscription {

...

}

The equivalent declarations in XML:

<entity-mappings xmlns="http://java.sun.com/xml/ns/persistence/orm"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/persistence/orm orm_1_0.xsd"

version="1.0">

<mapped-superclass class="org.mag.subscribe.Document">

...

</mapped-superclass>

<entity class="org.mag.Magazine">

<id-class class="org.mag.Magazine$MagazineId"/>

...

</entity>

<entity class="org.mag.Article">

...

</entity>

<entity class="org.mag.pub.Company">

...

</entity>

<entity class="org.mag.pub.Author">

...

</entity>

<entity class="org.mag.subscribe.Contract">

...

</entity>

<entity class="org.mag.subscribe.LineItem">

...

</entity>

<entity class="org.mag.subscribe.LifetimeSubscription" name="Lifetime">

...

</entity>

<entity class="org.mag.subscribe.TrialSubscription" name="Trial">

...

</entity>

<embeddable class="org.mag.pub.Address">

...

</embeddable>

</entity-mappings>

The persistence implementation must be able to retrieve and set the persistent

state of your entities, mapped superclasses, and embeddable types. JPA offers

two modes of persistent state access: field access, and

property access. Under field access, the implementation

injects state directly into your persistent fields, and retrieves changed state

from your fields as well. To declare field access on an entity with XML

metadata, set the access attribute of your entity

XML element to FIELD. To use field access for an

entity using annotation metadata, simply place your metadata and mapping

annotations on your field declarations:

@ManyToOne private Company publisher;

Property access, on the other hand, retrieves and loads state through JavaBean

"getter" and "setter" methods. For a property p of type

T, you must define the following getter method:

T getP();

For boolean properties, this is also acceptable:

boolean isP();

You must also define the following setter method:

void setP(T value);

To use property access, set your entity element's

access attribute to PROPERTY, or place your

metadata and mapping annotations on the getter method:

@ManyToOne

private Company getPublisher() { ... }

private void setPublisher(Company publisher) { ... }

Warning

When using property access, only the getter and setter method for a property should ever access the underlying persistent field directly. Other methods, including internal business methods in the persistent class, should go through the getter and setter methods when manipulating persistent state.

Also, take care when adding business logic to your getter and setter methods. Consider that they are invoked by the persistence implementation to load and retrieve all persistent state; other side effects might not be desirable.

Each class must use either field access or property access for all state; you cannot use both access types within the same class. Additionally, a subclass must use the same access type as its superclass.

The remainder of this document uses the term "persistent field" to refer to either a persistent field or a persistent property.

The Transient annotation specifies that a field is

non-persistent. Use it to exclude fields from management that would otherwise be

persistent. Transient is a marker annotation only; it

has no properties.

The equivalent XML element is transient. It has a single

attribute:

name: The transient field or property name. This attribute is required.

Annotate your simple identity fields with Id. This

annotation has no properties. We explore entity identity and identity fields in

Section 1.3, “

Identity Fields

”.

The equivalent XML element is id. It has one required

attribute:

name: The name of the identity field or property.

The previous section showed you how to declare your identity fields with the

Id annotation. It is often convenient to allow the

persistence implementation to assign a unique value to your identity fields

automatically. JPA includes the GeneratedValue

annotation for this purpose. It has the following properties:

GenerationType strategy: Enum value specifying how to auto-generate the field value. TheGenerationTypeenum has the following values:GeneratorType.AUTO: The default. Assign the field a generated value, leaving the details to the JPA vendor.GenerationType.IDENTITY: The database will assign an identity value on insert.GenerationType.SEQUENCE: Use a datastore sequence to generate a field value.GenerationType.TABLE: Use a sequence table to generate a field value.

String generator: The name of a generator defined in mapping metadata. We show you how to define named generators in Section 5, “ Generators ”. If theGenerationTypeis set but this property is unset, the JPA implementation uses appropriate defaults for the selected generation type.

The equivalent XML element is generated-value, which

includes the following attributes:

strategy: One ofTABLE,SEQUENCE,IDENTITY, orAUTO, defaulting toAUTO.generator: Equivalent to the generator property listed above.

Note

OpenJPA allows you to use the GeneratedValue annotation

on any field, not just identity fields. Before using the IDENTITY

generation strategy, however, read

Section 4.4, “

Autoassign / Identity Strategy Caveats

” in the Reference Guide.

OpenJPA also offers additional generator strategies for non-numeric fields,

which you can access by setting strategy to AUTO

(the default), and setting the generator string

to:

uuid-string: OpenJPA will generate a 128-bit type 1 UUID unique within the network, represented as a 16-character string. For more information on UUIDs, see the IETF UUID draft specification at: http://www1.ics.uci.edu/~ejw/authoring/uuid-guid/uuid-hex: Same asuuid-string, but represents the type 1 UUID as a 32-character hexadecimal string.uuid-type4-string: OpenJPA will generate a 128-bit type 4 pseudo-random UUID, represented as a 16-character string. For more information on UUIDs, see the IETF UUID draft specification at: http://www1.ics.uci.edu/~ejw/authoring/uuid-guid/uuid-type4-hex: Same asuuid-type4-string, but represents the type 4 UUID as a 32-character hexadecimal string.

These string constants are defined in

org.apache.openjpa.persistence.Generator.

If the entities are mapped to the same table name but with different schema

name within one PersistenceUnit intentionally, and the

strategy of GeneratedType.AUTO is used to generate the ID

for each entity, a schema name for each entity must be explicitly declared

either through the annotation or the mapping.xml file. Otherwise, the mapping

tool only creates the tables for those entities with the schema names under

each schema. In addition, there will be only one

OPENJPA_SEQUENCE_TABLE created for all the entities within

the PersistenceUnit if the entities are not identified

with the schema name. Read Section 6, “

Generators

” and

Section 11, “

Default Schema

” in the Reference Guide.

If your entity has multiple identity values, you may declare multiple

@Id fields, or you may declare a single @EmbeddedId

field. The type of a field annotated with EmbeddedId must

be an embeddable entity class. The fields of this embeddable class are

considered the identity values of the owning entity. We explore entity identity

and identity fields in Section 1.3, “

Identity Fields

”.

The EmbeddedId annotation has no properties.

The equivalent XML element is embedded-id. It has one

required attribute:

name: The name of the identity field or property.

Use the Version annotation to designate a version field.

Section 1.4, “

Version Field

” explained the importance of

version fields to JPA. This is a marker annotation; it has no properties.

The equivalent XML element is version, which has a single

attribute:

name: The name of the version field or property. This attribute is required.

Basic signifies a standard value persisted as-is to the

datastore. You can use the Basic annotation on persistent

fields of the following types: primitives, primitive wrappers,

java.lang.String, byte[],

Byte[], char[],

Character[], java.math.BigDecimal,

java.math.BigInteger,

java.util.Date, java.util.Calendar,

java.sql.Date, java.sql.Timestamp,

Enums, and Serializable types.

Basic declares these properties:

FetchType fetch: Whether to load the field eagerly (FetchType.EAGER) or lazily (FetchType.LAZY). Defaults toFetchType.EAGER.boolean optional: Whether the datastore allows null values. Defaults to true.

The equivalent XML element is basic. It has the following

attributes:

name: The name of the field or property. This attribute is required.fetch: One ofEAGERorLAZY.optional: Boolean indicating whether the field value may be null.

Many metadata annotations in JPA have a fetch property. This

property can take on one of two values: FetchType.EAGER or

FetchType.LAZY. FetchType.EAGER means that

the field is loaded by the JPA implementation before it returns the persistent

object to you. Whenever you retrieve an entity from a query or from the

EntityManager, you are guaranteed that all of its eager

fields are populated with datastore data.

FetchType.LAZY is a hint to the JPA runtime that you want to

defer loading of the field until you access it. This is called lazy

loading. Lazy loading is completely transparent; when you attempt to

read the field for the first time, the JPA runtime will load the value from the

datastore and populate the field automatically. Lazy loading is only a hint and

not a directive because some JPA implementations cannot lazy-load certain field

types.

With a mix of eager and lazily-loaded fields, you can ensure that commonly-used fields load efficiently, and that other state loads transparently when accessed. As you will see in Section 3, “ Persistence Context ”, you can also use eager fetching to ensure that entites have all needed data loaded before they become detached at the end of a persistence context.

Note

OpenJPA can lazy-load any field type. OpenJPA also allows you to dynamically change which fields are eagerly or lazily loaded at runtime. See Section 7, “ Fetch Groups ” in the Reference Guide for details.

The Reference Guide details OpenJPA's eager fetching behavior in Section 8, “ Eager Fetching ”.

Use the Embedded marker annotation on embeddable field

types. Embedded fields are mapped as part of the datastore record of the

declaring entity. In our sample model, Author and

Company each embed their Address,

rather than forming a relation to an Address as a

separate entity.

The equivalent XML element is embedded, which expects a

single attribute:

name: The name of the field or property. This attribute is required.

When an entity A references a single entity

B, and other As might also reference the same

B, we say there is a many to one

relation from A to B. In our sample

model, for example, each magazine has a reference to its publisher. Multiple

magazines might have the same publisher. We say, then, that the

Magazine.publisher field is a many to one relation from magazines to

publishers.

JPA indicates many to one relations between entities with the

ManyToOne annotation. This annotation has the following properties:

Class targetEntity: The class of the related entity type.CascadeType[] cascade: Array of enum values defining cascade behavior for this field. We explore cascades below. Defaults to an empty array.FetchType fetch: Whether to load the field eagerly (FetchType.EAGER) or lazily (FetchType.LAZY). Defaults toFetchType.EAGER. See Section 2.6.1, “ Fetch Type ” above for details on fetch types.boolean optional: Whether the related object must exist. Iffalse, this field cannot be null. Defaults totrue.

The equivalent XML element is many-to-one. It accepts the

following attributes:

name: The name of the field or property. This attribute is required.target-entity: The class of the related type.fetch: One ofEAGERorLAZY.optional: Boolean indicating whether the field value may be null.

We introduce the JPA EntityManager in

Chapter 8,

EntityManager

. The EntityManager

has APIs to persist new entities, remove (delete) existing

entities, refresh entity state from the datastore, and merge detached

entity state back into the persistence context. We explore all of

these APIs in detail later in the overview.

When the EntityManager is performing the above

operations, you can instruct it to automatically cascade the operation to the

entities held in a persistent field with the cascade property

of your metadata annotation. This process is recursive. The cascade

property accepts an array of CascadeType enum

values.

CascadeType.PERSIST: When persisting an entity, also persist the entities held in this field. We suggest liberal application of this cascade rule, because if theEntityManagerfinds a field that references a new entity during flush, and the field does not useCascadeType.PERSIST, it is an error.CascadeType.REMOVE: When deleting an entity, also delete the entities held in this field.CascadeType.REFRESH: When refreshing an entity, also refresh the entities held in this field.CascadeType.MERGE: When merging entity state, also merge the entities held in this field.

Note

OpenJPA offers enhancements to JPA's CascadeType.REMOVE functionality, including additional annotations to control how and when dependent fields will be removed. See Section 4.2.1, “ Dependent ” for more details.

CascadeType defines one additional value,

CascadeType.ALL, that acts as a shortcut for all of the values above.

The following annotations are equivalent:

@ManyToOne(cascade={CascadeType.PERSIST,CascadeType.REMOVE,

CascadeType.REFRESH,CascadeType.MERGE})

private Company publisher;

@ManyToOne(cascade=CascadeType.ALL) private Company publisher;

In XML, these enumeration constants are available as child elements of the

cascade element. The cascade element is

itself a child of many-to-one. The following examples are

equivalent:

<many-to-one name="publisher">

<cascade>

<cascade-persist/>

<cascade-merge/>

<cascade-remove/>

<cascade-refresh/>

</cascade>

</many-to-one>

<many-to-one name="publisher">

<cascade>

<cascade-all/>

</cascade>

</many-to-one>

When an entity A references multiple B

entities, and no two As reference the same

B, we say there is a one to many relation from

A to B.

One to many relations are the exact inverse of the many to one relations we

detailed in the preceding section. In that section, we said that the

Magazine.publisher field is a many to one relation from magazines to

publishers. Now, we see that the Company.mags field is the

inverse - a one to many relation from publishers to magazines. Each company may

publish multiple magazines, but each magazine can have only one publisher.

JPA indicates one to many relations between entities with the

OneToMany annotation. This annotation has the following properties:

Class targetEntity: The class of the related entity type. This information is usually taken from the parameterized collection or map element type. You must supply it explicitly, however, if your field isn't a parameterized type.String mappedBy: Names the many to one field in the related entity that maps this bidirectional relation. We explain bidirectional relations below. Leaving this property unset signals that this is a standard unidirectional relation.CascadeType[] cascade: Array of enum values defining cascade behavior for the collection elements. We explore cascades above in Section 2.8.1, “ Cascade Type ”. Defaults to an empty array.FetchType fetch: Whether to load the field eagerly (FetchType.EAGER) or lazily (FetchType.LAZY). Defaults toFetchType.LAZY. See Section 2.6.1, “ Fetch Type ” above for details on fetch types.

The equivalent XML element is one-to-many, which includes

the following attributes:

name: The name of the field or property. This attribute is required.target-entity: The class of the related type.fetch: One ofEAGERorLAZY.mapped-by: The name of the field or property that owns the relation. See Section 2, “ Field and Property Metadata ”.

You may also nest the cascade element within a

one-to-many element.

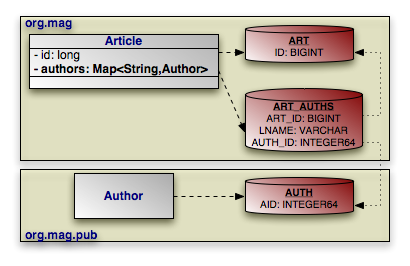

When two fields are logical inverses of each other, they form a

bidirectional relation. Our model contains two bidirectional

relations: Magazine.publisher and Company.mags

form one bidirectional relation, and Article.authors

and Author.articles form the other. In both cases,

there is a clear link between the two fields that form the relationship. A

magazine refers to its publisher while the publisher refers to all its published

magazines. An article refers to its authors while each author refers to her

written articles.

When the two fields of a bidirectional relation share the same datastore

mapping, JPA formalizes the connection with the mappedBy

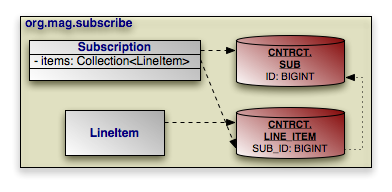

property. Marking Company.mags as mappedBy

Magazine.publisher means two things: